Mohammed Baharoon

Harvard Medical School, Harvard University.

mohammed_baharoon@hms.harvard.edu

Ahlan! I am currently a master's student in Biomedical Informatics at Harvard Medical School, working with Pranav Rajpurkar.

I am interested in self-supervised and multimodal learning applied to medicine, with an emphasis on computer vision and on applications such as report generation and referring segmentation. I am also interested in using AI to improve medical education. My work has been published at conferences such as MICCAI, ML4H, and MLHC.

Previously, I worked at the Vector Institute and the University of Toronto with Bo Wang, and at King Abdullah University of Science and Technology (KAUST) with Dominik L. Michels.

Outside of work

For fun, I love rock climbing, reading Middle Eastern history, drinking tea, and listening to classical Arabic music.

My proudest papers

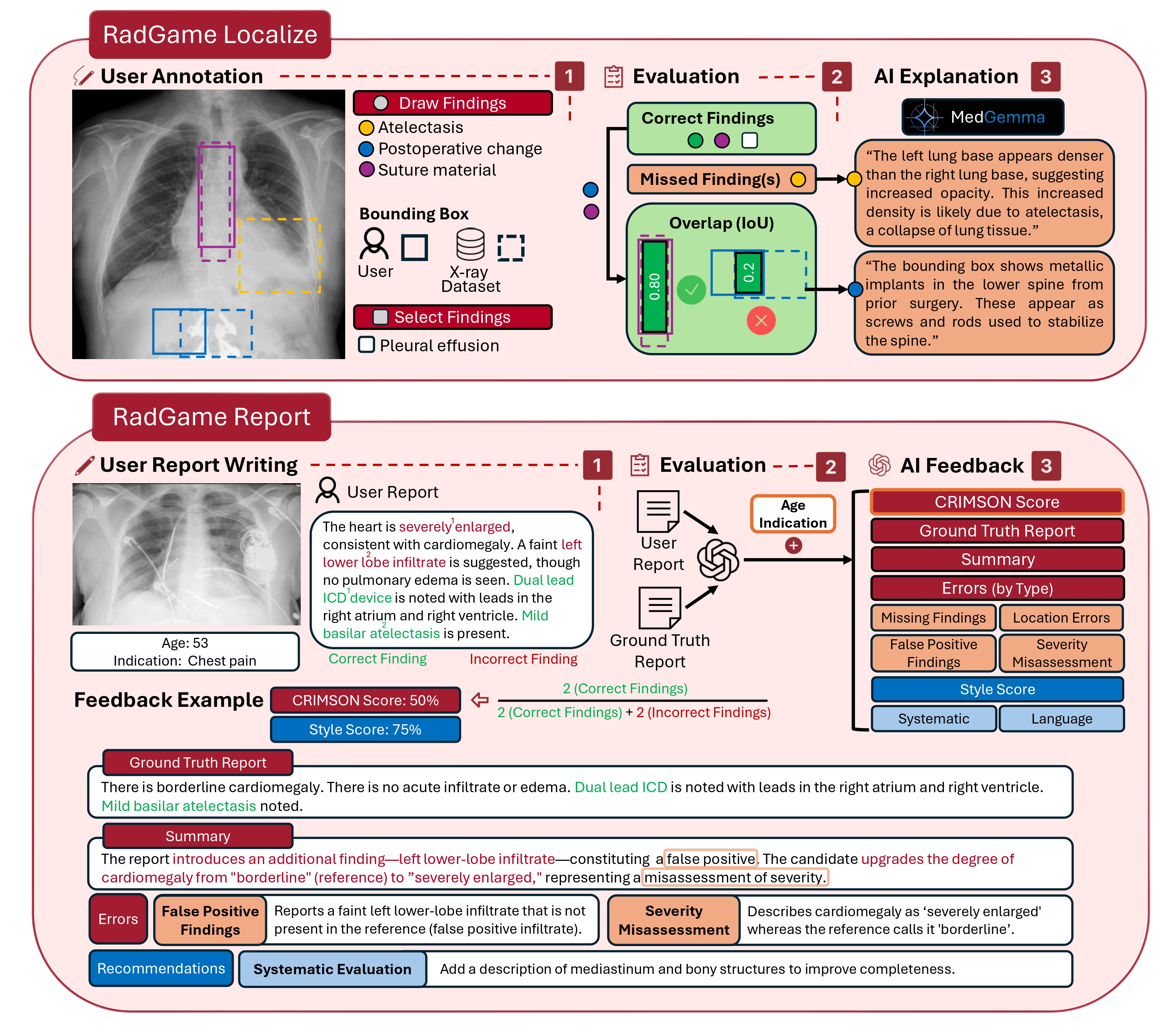

RadGame: An AI-Powered Platform for Radiology Education

RadGame is an AI-powered, gamified platform for radiology education that trains learners to localize findings and write reports. It provides automated, AI-driven feedback by comparing each learner's performance against radiologist annotations from public datasets. In a prospective evaluation, participants using RadGame improved their localization accuracy by 68% (vs. 17% with traditional methods) and their report-writing accuracy by 31% (vs. 4% with traditional methods).

Published in: Machine Learning for Health (ML4H) 2025, European Congress of Radiology (ECR) 2026

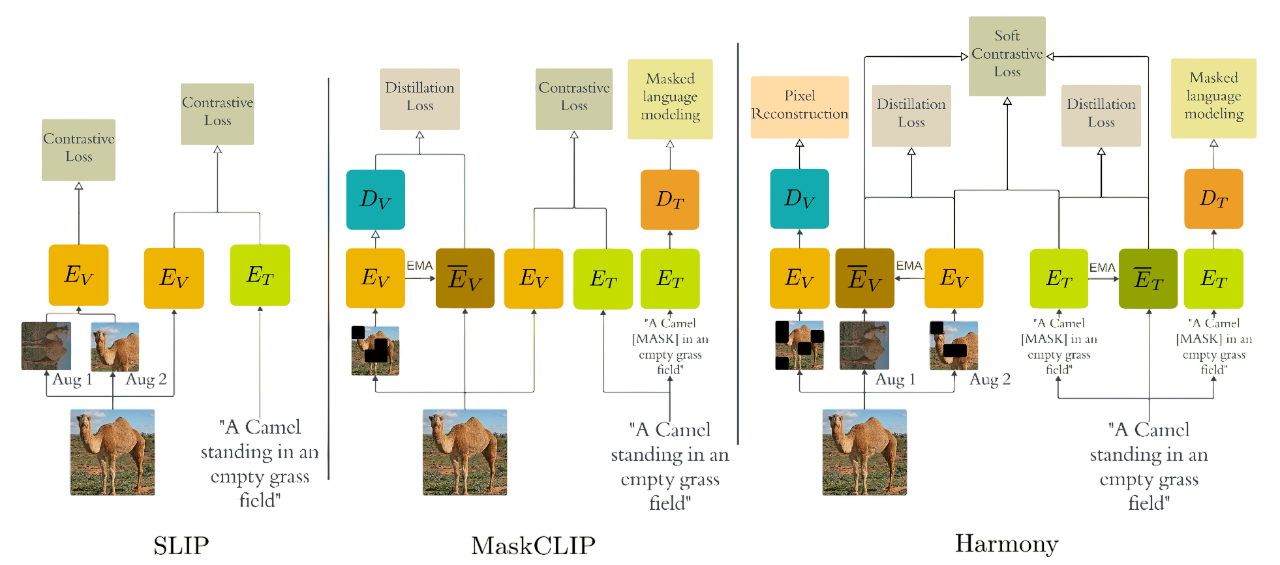

Harmony: A Joint Self-Supervised and Weakly-Supervised Framework for Learning General Purpose Visual Representations

Harmony combines vision-language training with discriminative and generative self-supervision to learn visual features that generalize across different vision tasks. CLIP lacks the localized features needed for dense prediction tasks; Harmony addresses this by optimizing five different objectives simultaneously, and it trains efficiently on web-scraped data without requiring negative examples. Harmony significantly outperforms the CLIP baseline and leading methods such as SLIP, MaskCLIP, and DetailCLIP.

Published in: Transactions on Machine Learning Research (TMLR) 2025